Here is the end result; read on if you think it's interesting.

Why I find this interesting

Firstly, because of its relevance to virtual production techniques like those used in filming The Mandalorian, and secondly because perspective correction offers a zero-barrier way for a single viewer to experience mixed reality, with no headset or glasses required.

An idea for an application

I'm picturing a gallery filled mostly with traditional art, except that one frame holds a screen showing a 3D scene. As you walk past, the image shifts so that the scene looks approximately correct from the angle you're viewing it at, as though the screen were a window into another world rather than a flat image.

Some limitations of this method:

- you can only have one primary viewer at a time (fine with the current social distancing)

- the lack of stereoscopy can be disorienting (the perspective is corrected for a single point averaged between the eyes)

How to UV hack it

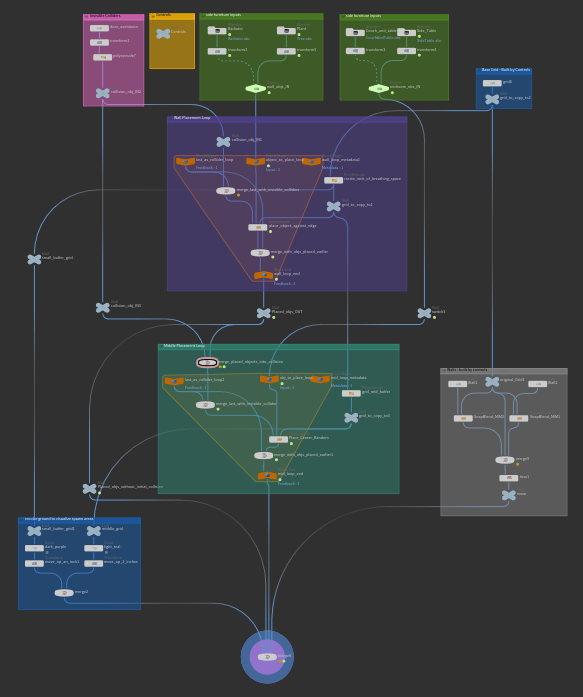

Here is a basic overview of how to achieve this using TouchDesigner. I won't go over every button click in detail, just the main concepts.

You will need:

- an Xbox Kinect and an adapter for PC

- TouchDesigner

- a human head (could be yours)

- a general understanding of how UVs work

Step 1: Setting up the virtual scene

Add a box with the dimensions of your screen.

Separate it into two objects: one containing just the 'screen object' itself and one containing the 'box back'.

The 'box back' will eventually be replaced with whatever you want to be seen through the screen, but for now it serves as a stand-in for testing whether the perspective correction is working.
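Since the head and hand positions come from the Kinect in real-world units (the Kinect SDK reports skeleton joint positions in meters), it can help to build the box at your screen's physical size from the start. A minimal sketch, assuming your monitor is described by its diagonal in inches and its aspect ratio; `screen_dimensions` is a hypothetical helper, not part of TouchDesigner:

```python
import math


def screen_dimensions(diagonal_inches, aspect_w=16, aspect_h=9):
    """Return (width_m, height_m) of a flat screen from its diagonal size.

    Assumes square pixels and a diagonal quoted in inches, as is usual for
    monitors. The result is in meters so the box matches Kinect units.
    """
    diagonal_m = diagonal_inches * 0.0254
    # The diagonal is the hypotenuse of the aspect-ratio right triangle.
    hyp = math.hypot(aspect_w, aspect_h)
    return diagonal_m * aspect_w / hyp, diagonal_m * aspect_h / hyp


width, height = screen_dimensions(27)
print(f"box size: {width:.3f} m x {height:.3f} m")
```

For a 27-inch 16:9 monitor this gives roughly 0.60 m by 0.34 m. Calibration in the next step will correct any small mismatch.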

Step 2: Calibration

Set up the Kinect in TouchDesigner and isolate the head and one hand from its tracking data. The hand will be used for calibration.

Create a placeholder object and drive its position with the hand location.

The object should now follow your movements in virtual space.

Place your hand on one corner of the computer screen and match the transforms of the 'box back' and 'screen object' to the placeholder.

Then do the same for another corner, so that the virtual screen lines up with the physical one.
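If the second corner you touch is diagonally opposite the first, those two hand positions are enough to recover the screen's center, size and facing direction. A rough sketch, assuming the positions are already in your scene's coordinate space (right-handed, y up) and that the screen stands vertically with no tilt or roll; `calibrate_from_corners` is a hypothetical helper, and in TouchDesigner you would still apply the results to the objects' transforms yourself:

```python
import math


def calibrate_from_corners(top_left, bottom_right):
    """Estimate the screen's pose from two hand-measured opposite corners.

    Assumptions (hypothetical setup, not a TouchDesigner API):
      - points are (x, y, z) in a right-handed, y-up space;
      - the screen is vertical, so its width runs parallel to the floor.
    Returns (center, yaw_degrees, width, height), where yaw is the rotation
    about +y that turns the screen's local +x axis onto its width direction.
    """
    tlx, tly, tlz = top_left
    brx, bry, brz = bottom_right
    # The midpoint of a rectangle's diagonal is its center.
    center = ((tlx + brx) / 2, (tly + bry) / 2, (tlz + brz) / 2)
    # With no roll, the horizontal part of the diagonal lies along the width.
    dx, dz = brx - tlx, brz - tlz
    width = math.hypot(dx, dz)
    # The vertical part of the diagonal is the height.
    height = tly - bry
    # Rotating +x by yaw about +y gives (cos yaw, 0, -sin yaw).
    yaw = math.degrees(math.atan2(-dz, dx))
    return center, yaw, width, height


# Hypothetical measurements: a screen facing the sensor, 1 m away.
print(calibrate_from_corners((-0.3, 0.5, 1.0), (0.3, 0.1, 1.0)))
```

Touching more corners and averaging would reduce the effect of tracking jitter, at the cost of a longer calibration.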

Step 3: UV hacking

Once the screen is calibrated in the virtual world, add a camera and link its position to your tracked head location. Then set the 'screen object' as the camera's look-at object.

Include only the 'box back' in the renderable objects; do not include the 'screen object'.

Then project UVs onto the 'screen object' from this camera's perspective, and texture the 'screen object' with the camera's render.
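Projecting UVs from a camera boils down to running each vertex of the 'screen object' through that camera's perspective projection and using the resulting image position as its texture coordinate. Below is a minimal sketch of that math for a pinhole camera with a look-at target, not TouchDesigner's internal implementation; the function names, the vertical field of view `fov_y_deg`, and the convention that v increases upward are all assumptions:

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def project_uv(point, eye, target, fov_y_deg, aspect, up=(0.0, 1.0, 0.0)):
    """Return the (u, v) of `point` in the image of a camera at `eye`.

    The camera looks at `target` (the head camera looking at the screen).
    u and v run from 0 to 1 across that camera's render; aspect should
    match the render's width / height. Fails if looking straight along `up`.
    """
    forward = normalize(sub(target, eye))
    right = normalize(cross(forward, up))
    true_up = cross(right, forward)
    d = sub(point, eye)
    depth = dot(d, forward)  # distance along the viewing axis
    tan_half = math.tan(math.radians(fov_y_deg) / 2)
    # Perspective divide into normalized device coordinates in [-1, 1].
    x_ndc = dot(d, right) / (depth * tan_half * aspect)
    y_ndc = dot(d, true_up) / (depth * tan_half)
    return ((x_ndc + 1) / 2, (y_ndc + 1) / 2)


# Hypothetical layout: a 0.6 m x 0.4 m screen centered on the origin in the
# z = 0 plane, with the head 0.8 m in front of it and off to one side.
head = (0.2, 0.1, 0.8)
corners = [(-0.3, -0.2, 0.0), (0.3, -0.2, 0.0), (0.3, 0.2, 0.0), (-0.3, 0.2, 0.0)]
for corner in corners:
    print(corner, project_uv(corner, head, (0.0, 0.0, 0.0), 60.0, 16 / 9))
```

As the head moves, the corner UVs change, which is what makes the textured screen appear to show the box from the viewer's angle.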

You will need to subdivide your 'screen object' before you do this, because UVs are linearly interpolated between vertices while the perspective projection is not linear, so a single undivided quad would warp the image.
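To see why subdividing helps, consider one edge of the screen viewed at an angle. UVs are computed exactly only at vertices and interpolated in between, so the error is the gap between the true projected coordinate and that straight-line guess. A small 1D sketch, assuming a pinhole camera at the origin looking along +z (so u = x / z) and a hypothetical edge whose near end is 0.5 m away and far end 1.1 m away:

```python
def lerp(p, q, t):
    """Linearly interpolate between 2D points p and q."""
    return (p[0] + (q[0] - p[0]) * t, p[1] + (q[1] - p[1]) * t)


def projected_u(point):
    """1D pinhole camera at the origin looking along +z: u = x / z."""
    x, z = point
    return x / z


def max_interp_error(a, b, segments, samples=100):
    """Worst gap between the true projected u and the linearly interpolated
    u along edge a->b when the edge is split into `segments` equal pieces."""
    worst = 0.0
    for s in range(segments):
        p0 = lerp(a, b, s / segments)
        p1 = lerp(a, b, (s + 1) / segments)
        # UVs are computed exactly only at each segment's end vertices...
        u0, u1 = projected_u(p0), projected_u(p1)
        for k in range(samples + 1):
            f = k / samples
            true_u = projected_u(lerp(p0, p1, f))
            # ...and linearly interpolated everywhere in between.
            lin_u = u0 + (u1 - u0) * f
            worst = max(worst, abs(true_u - lin_u))
    return worst


near, far = (-0.3, 0.5), (0.3, 1.1)
for n in (1, 4, 16):
    print(n, "segments -> max error", round(max_interp_error(near, far, n), 4))
```

The error shrinks roughly with the square of the segment length, so a moderately subdivided grid is usually enough; the more obliquely the screen is viewed, the more subdivisions you need.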

Next, set up a second camera pointed straight at the 'screen object', framed so that the screen fills its view.

Then output that render to your display.

And that's it.